
In the solar industry today, we face a fundamental challenge: distinguishing between data that are based on physics and validated scientific methodology, and those that are a product of subjective manipulation and legacy approaches. This distinction has a profound impact on the quality of decision-making and the long-term success of projects.

Why is this discussion so important? Because from performance modelling and system design to financial risk assessment, every major decision that developers and investors make rests on the quality of the solar, meteorological, and environmental data. Yet many projects are still built on low-quality, unvalidated datasets.

Some developers use models that rely on empirical, simplified correlations rather than the laws of physics. Others combine data from multiple sources, converting monthly values into synthetic hourly datasets. At their best, these approaches create high uncertainty. At their worst, they produce misleading numbers that result in suboptimal project design and distorted economics.

As the solar industry continues to become a key component of the global power mix, the margin for error is shrinking. Stakeholders demand greater accuracy, transparency, and accountability. The industry must therefore move away from “black-box” data practices and march toward validated, physics-based solar models that can stand up to scientific scrutiny.

Empirical models, by definition, are based on statistical correlations. They can provide rough approximations under specific conditions, but they lack the physical foundation to handle complex geographies. These models are particularly weak in regions with high atmospheric variability.

Some consultants mix and match solar data from different sources and convert them into synthetically generated hourly data. These approaches are subjective, lack transparency, and result in unrealistic, misleading data products. Their results cannot be independently reproduced or verified against ground measurements. Key physical relationships, such as the balance between global, direct, and diffuse irradiance, are often broken. Using such data is like trying to sit on a three-legged stool whose legs are of different lengths.
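One physical relationship that mixed-source data can break is the closure between the irradiance components: global horizontal irradiance (GHI) should equal direct normal irradiance (DNI) projected onto the horizontal plane plus diffuse horizontal irradiance (DHI), that is GHI = DNI · cos(θz) + DHI, where θz is the solar zenith angle. The minimal Python sketch below checks a single daytime record against that relation. The function names and the tolerance values (5 % of GHI, with a floor of 10 W/m²) are hypothetical choices for illustration, not a published quality-control standard.

```python
import math


def closure_residual(ghi: float, dni: float, dhi: float, zenith_deg: float) -> float:
    """Return GHI minus the GHI reconstructed from its components, in W/m^2.

    Uses the closure relation GHI = DNI * cos(zenith) + DHI.
    Intended for daytime records (zenith angle below 90 degrees).
    """
    reconstructed_ghi = dni * math.cos(math.radians(zenith_deg)) + dhi
    return ghi - reconstructed_ghi


def is_consistent(
    ghi: float,
    dni: float,
    dhi: float,
    zenith_deg: float,
    rel_tol: float = 0.05,  # hypothetical: 5 % of GHI
    abs_tol: float = 10.0,  # hypothetical: 10 W/m^2 floor for low-irradiance hours
) -> bool:
    """Flag a record as physically consistent if the closure residual is small."""
    tolerance = max(rel_tol * ghi, abs_tol)
    return abs(closure_residual(ghi, dni, dhi, zenith_deg)) <= tolerance
```

For example, a record with GHI = 500 W/m², DNI = 800 W/m², and DHI = 100 W/m² at θz = 60° closes exactly, whereas substituting a DNI of 600 W/m² taken from a different source leaves a residual of 100 W/m² and is flagged as inconsistent.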

Empirical approaches can be easily tuned to best support the business case. Data cherry-picking and empirical methodologies have little to do with science. They expose projects to the risk of underperformance or damage.

Physics doesn’t bend to a business case. Physics shows us the truth, whether we like it or not.

Physics-based models follow the fundamental laws of radiative transfer in the atmosphere. They simulate how sunlight travels through the atmosphere, accounting for cloud dynamics, aerosols, water vapor, and local terrain effects. These models combine a number of global inputs, including data from meteorological satellites, global weather models, and other geospatial datasets, with rigorous scientific equations.

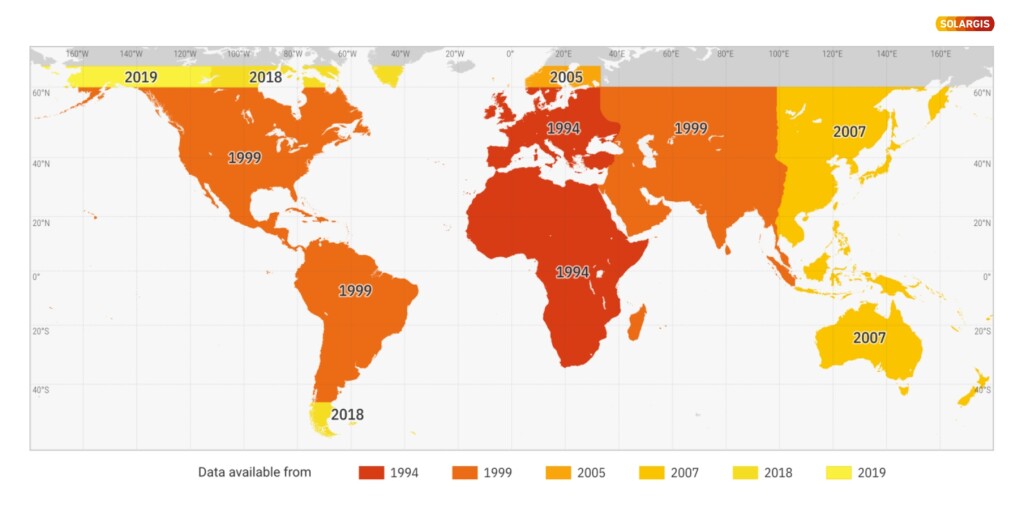

The highest-quality solar, meteorological, and environmental data come from validated physics-based solar models that deliver consistent accuracy across all climates and geographies. Solargis provides high-resolution, satellite-based data for all inhabited regions between 60°S and 65°N, with historical coverage extending back decades, depending on location. Image source: Solargis.

Rigorous physics-based models do not require further manipulation to produce acceptable results. They are designed to perform accurately across all climate zones and to produce solar irradiance estimates that are physically consistent, traceable, and reproducible. They can be validated against high-quality ground measurements and refined for site-specific conditions through rigorous model adaptation rather than speculative tuning.
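The text does not describe how model adaptation is carried out. One widely used family of site-adaptation techniques fits a correction between modeled values and a period of on-site measurements, then applies that correction to the long-term modeled series. The Python sketch below shows the simplest variant, an ordinary least-squares linear fit. The function names are hypothetical, and this is not presented as Solargis's method; practical site adaptation requires quality-controlled, time-aligned data and may use more elaborate corrections.

```python
from typing import List, Sequence, Tuple


def fit_linear_correction(
    modeled: Sequence[float], measured: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of measured = slope * modeled + intercept.

    Both sequences must be time-aligned values for the same period
    (e.g. hourly GHI in W/m^2 from the model and from an on-site sensor).
    """
    n = len(modeled)
    if n != len(measured) or n < 2:
        raise ValueError("need at least two time-aligned pairs")
    mean_x = sum(modeled) / n
    mean_y = sum(measured) / n
    sxx = sum((x - mean_x) ** 2 for x in modeled)
    if sxx == 0:
        raise ValueError("modeled values have no variance")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(modeled, measured))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def apply_linear_correction(
    modeled: Sequence[float], slope: float, intercept: float
) -> List[float]:
    """Apply the fitted correction to a (long-term) modeled series.

    Negative results are clipped to zero because irradiance cannot be negative.
    """
    return [max(0.0, slope * x + intercept) for x in modeled]
```

The correction is estimated only on the period where model and measurements overlap and then applied to the full modeled history, so the long-term record keeps its physically modeled variability while its level is anchored to the site.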

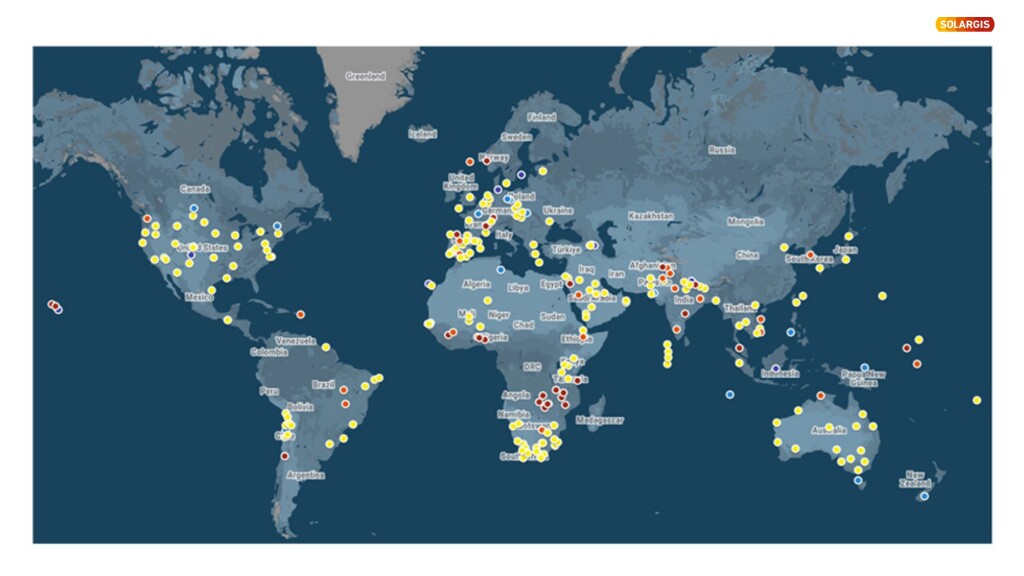

Comparing model outputs with ground-measured data from reference stations verifies the accuracy of solar models and reduces uncertainty across all climates. Solargis validates its model using high-quality ground measurements from over 320 public stations worldwide. Image source: Solargis.
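The text does not say which statistics are used in this validation. Common choices for comparing modeled irradiance with ground measurements are the mean bias (systematic over- or underestimation), the mean absolute error (MAE), and the root-mean-square error (RMSE), often also expressed as a percentage of the mean measured value. The Python sketch below computes these for two time-aligned series. The function name and dictionary keys are hypothetical, and the sketch assumes the inputs have already been quality-controlled and restricted to matching daytime records.

```python
import math
from typing import Dict, Sequence


def validation_metrics(
    modeled: Sequence[float], measured: Sequence[float]
) -> Dict[str, float]:
    """Compare a modeled series with time-aligned ground measurements.

    Returns bias, MAE and RMSE in the input units (e.g. W/m^2), plus bias
    and RMSE relative to the mean measured value, in percent.
    A positive bias means the model overestimates.
    """
    n = len(modeled)
    if n == 0 or n != len(measured):
        raise ValueError("series must be non-empty and of equal length")
    diffs = [m - g for m, g in zip(modeled, measured)]  # model minus ground
    mean_measured = sum(measured) / n
    bias = sum(diffs) / n
    mae = sum(abs(d) for d in diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return {
        "bias": bias,
        "mae": mae,
        "rmse": rmse,
        "bias_pct": 100.0 * bias / mean_measured,
        "rmse_pct": 100.0 * rmse / mean_measured,
    }
```

Bias and RMSE answer different questions: errors that cancel out leave the bias near zero, while RMSE still reveals how far individual values scatter around the measurements.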

Machine learning techniques are now increasingly used to complement these models. Rather than replacing physics, they enhance it, helping to fill gaps in data relationships and to capture subtle patterns that emerge from vast datasets. The result is a new generation of solar models that are more accurate and robust than ever before.

Financial stakeholders increasingly demand this level of scientific rigor, and rightly so. They now require objective, physics-based simulations that are transparent and can be independently validated. Many even request formal ‘Reliance Letters’ as evidence that the data and modeling practices behind a project’s financial case are science-driven and free from commercial bias. This shift is helping to eliminate providers that offer unverified or inconsistent data, improving overall credibility across the market.

Over the past 20 years, the solar data industry has come a long way from the days when the Linke turbidity factor was the common method for approximating the impact of atmospheric aerosols on the amount of sunlight reaching the ground.

This single atmospheric parameter provided only a rough approximation of attenuation by aerosols and water vapor (how "polluted" the atmosphere is) and relied on sparse clear-sky measurements, often limited to monthly averages. Linke turbidity datasets also had limited geographic coverage, and their coarse resolution made them unsuitable for many locations.
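To see why a single turbidity value was such a coarse tool, consider how it enters a classic clear-sky model. The sketch below follows the beam (direct normal) component of the ESRA clear-sky model, in which the Linke turbidity factor at air mass 2 multiplies the Rayleigh optical thickness inside an exponential attenuation term, so any error in a monthly turbidity value feeds straight into the clear-sky estimate. Simplifying assumptions, labeled in the code: a solar constant of 1361 W/m², no Sun-Earth distance (eccentricity) correction, sea-level pressure, no refraction correction, and the Kasten and Young (1989) air-mass formula. It illustrates the legacy approach and is not a production model.

```python
import math

SOLAR_CONSTANT = 1361.0  # W/m^2; assumption: eccentricity correction ignored


def relative_air_mass(zenith_deg: float) -> float:
    """Kasten & Young (1989) relative optical air mass (sea level assumed)."""
    return 1.0 / (
        math.cos(math.radians(zenith_deg))
        + 0.50572 * (96.07995 - zenith_deg) ** -1.6364
    )


def rayleigh_optical_thickness(m: float) -> float:
    """Integral Rayleigh optical thickness as parameterized in the ESRA model."""
    if m <= 20.0:
        return 1.0 / (
            6.6296 + 1.7513 * m - 0.1202 * m**2 + 0.0065 * m**3 - 0.00013 * m**4
        )
    return 1.0 / (10.4 + 0.718 * m)


def clear_sky_beam_normal(zenith_deg: float, linke_turbidity: float) -> float:
    """ESRA-style clear-sky direct normal irradiance in W/m^2.

    linke_turbidity is the Linke turbidity factor at air mass 2; the whole
    aerosol and water-vapor attenuation is folded into this one number.
    """
    if zenith_deg >= 90.0:
        return 0.0  # sun below the horizon: no beam irradiance
    m = relative_air_mass(zenith_deg)
    return SOLAR_CONSTANT * math.exp(
        -0.8662 * linke_turbidity * m * rayleigh_optical_thickness(m)
    )
```

Comparing `clear_sky_beam_normal(30.0, 3.0)` with `clear_sky_beam_normal(30.0, 5.0)` shows how strongly the estimate depends on this one parameter: raising the turbidity from 3 to 5 lowers the clear-sky DNI by about 200 W/m².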

Thanks to modern weather-observation systems, cloud-based computing, and deep knowledge and long experience in the field, today’s models are far more advanced. They simulate the transmission of solar radiation through the atmosphere, including complex cloud interactions, using detailed atmospheric data from satellite observation systems and global weather models. This enables accurate solar irradiance estimates even in complex geographies with high atmospheric and cloud variability, such as West Africa, Indonesia, Northern India, or the Amazon Basin. Years of experience in validating these models against ground measurements have been instrumental in making them more reliable and widely applicable.

Modern solar radiation models are also much more sensitive. They can detect local anomalies such as wildfire smoke or industrial haze, but that sensitivity also demands care: the more advanced the model, the more it relies on high-quality, high-resolution input data. Moreover, these models need expert calibration as well as regular monitoring and maintenance.

The combination of physics and the newest technology results in PV systems that perform reliably in practice, not only in theory.

If you invest in physics-based, scientifically validated solar radiation data, you avoid surprises from subjectively manipulated and unvalidated data. And most importantly, you have an economically sound and profitable solar power plant that performs as expected, passes due diligence, and withstands scrutiny from investors, banks, and grid operators.

PV developers are often offered several datasets and invited to choose the one that best justifies their business case. Some consultants go a step further, combining values from diverse sources into artificial constructs and offering them to clients. These approaches may yield optimistic numbers at the project financing stage, but they lack scientific rigor and integrity, and they open the door to frustrating surprises in the future.

By contrast, a single high-quality dataset that is built on fundamental atmospheric principles, validated with ground measurements, and consistent across geographies and time is all you need, whether you manage one PV power plant or a whole portfolio.

While patchwork approaches may seem to offer flexibility, in reality they break the physical integrity of datasets, introduce inconsistencies, and increase uncertainty. A single dataset that respects the laws of physics will always outperform even the most elaborate mosaic of empirical assumptions. And most importantly, it works everywhere consistently, from Texas to Tokyo.

Finally, here’s a brief checklist of the key criteria to consider when choosing a provider of solar, meteorological, and environmental data.

What to demand from your data provider:

What to avoid:

By holding data providers and consultants to these standards, developers can ensure their projects are backed by science, not assumptions – leading to more reliable performance, stronger financial outcomes, and greater trust across the solar industry.